Workshop 6 - Smoothing and Density Estimation

- Smoothing a data set to extract a smooth trend using

ksmoothandloess - Add curves to a plot using the

linesfunction, and shaded regions usingpolygon - Add a smooth trend and confidence interval to a scatterplot

- Estimate a smooth density function of a single variable using the

densityfunction. - Add smooth densities to histograms, and draw violin plots and ridgeline plots

- Make a 2-D density estimate, and visualise it using

contours and a heat map with theimagefunction.

You will need to install the following packages for today’s workshop:

ggplot2for the violin plotggridgesfor the ridgeline plot

install.packages(c("ggplot2","ggridges"))1 Smoothing

Smoothing is a very powerful technique used all across data analysis. Other names given to this technique are curve fitting and low pass filtering. It is designed to detect trends in the presence of noisy data where the shape of the trend is unknown. The smoothing name comes from the fact that to accomplish this feat, we assume that the trend is smooth, as in a smooth surface. In contrast, the noise, or deviation from the trend, is unpredictably wobbly. The justification for this is that conditional expectations or probabilities can be thought of as trends of unknown shapes that we need to estimate in the presence of uncertainty.

Download data: UN

The data we’ll use to explore smoothing is the UN data

set comprising various measures of national statistics on health,

welfare, and education for 213 countries and other areas from 2009-2011,

as reported by the UN. Let’s consider the relationship between the

wealth of the country (as measured by the GDP per person, in 1000s US

dollars) and infant mortality (defined as deaths by age 1 year per 1000

live births).

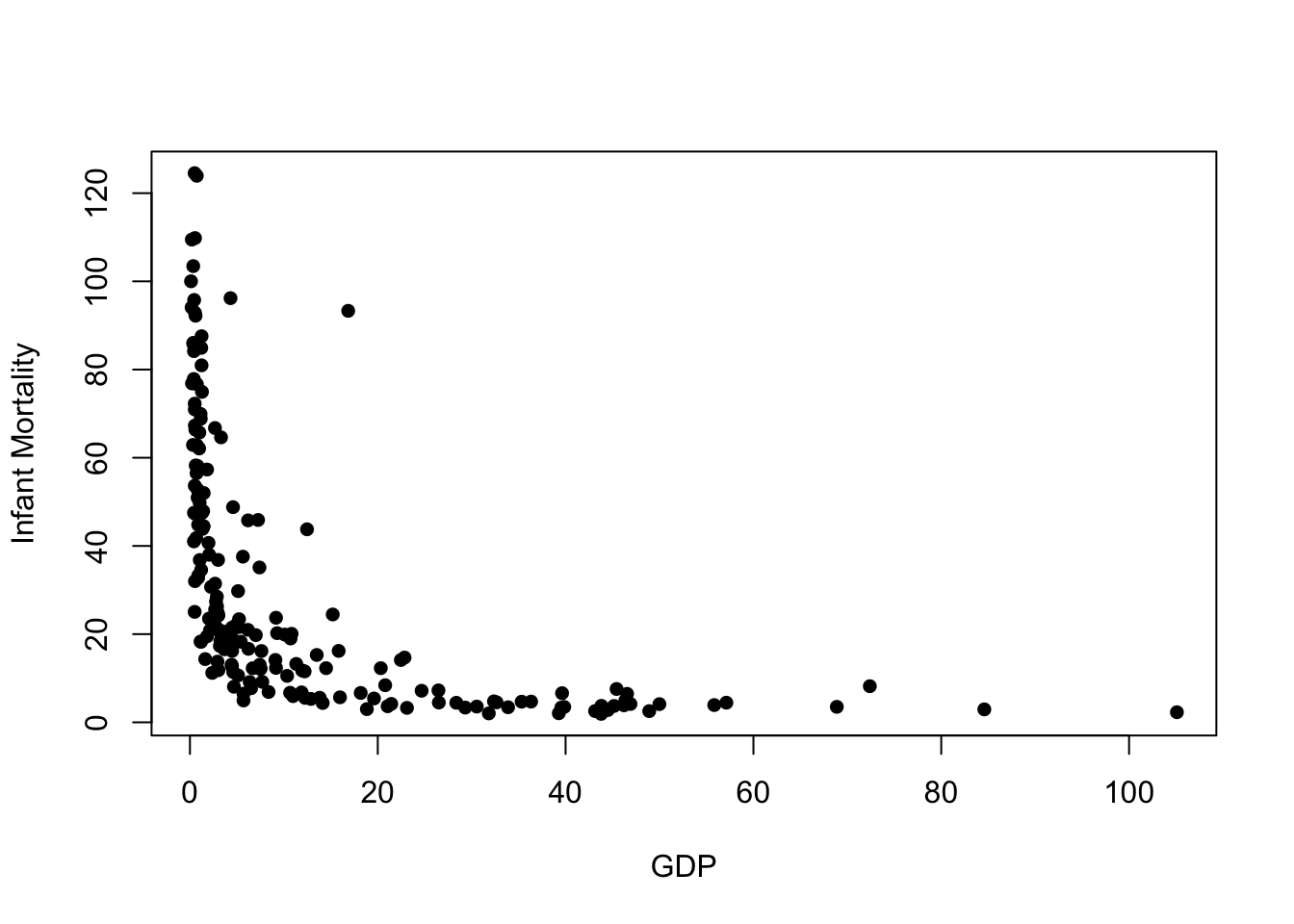

plot(x=UN$ppgdp, y=UN$infantMortality, xlab="GDP", ylab="Infant Mortality", pch=16)

We quite clearly see a strong relationship here, but it is far from linear. Poorer countries have higher levels of infant mortality, and wealthier countries have lower mortality rates. We also seee that beyond a point, further increases in GDP do not result in further reduction in mortality, and similarly the very poor countries have higher, and much more variable, infant mortality rates. We also notice one possible outlier with a very high infant mortality compared to other countries of similar GDP (this turns out to be Equatorial Guinea).

1.1 Moving average

The general idea of a moving average is to group data points into a local neighbourhood in which the value of the trend is assumed to be constant, which we estimate by the average of these nearby points. Then as we move along the x-axis, our neighbourhood and hence the estimate of the trend will change.

ksmooth function using

a "box" kernel and a bandwidth to govern the

size of the neighbourhood as follows:

fit <- ksmooth(x=UN$ppgdp, y=UN$infantMortality, kernel = "box", bandwidth = 5)x and

y corresponding to our estimates of the smooth trend

(y) at the corresponding values of GDP (x). We

now just need to add this to our plot.

We can use R’s line drawing functions to add curves to a

plot via the lines function, which takes a vector of

x and y values and draws straight-line

segments connecting then on top of the current

plot.

If we want to add simple straight lines to lines to plots, then we

can use the simpler abline function. abline

can be used in three different ways:

- Draw a horizontal line: pass a value to the

hargument,abline(h=3)draws a horizontal line at \(y=3\) - Draw a vertical line: pass a value to the

cargument,abline(v=5)draws a vertical line at \(x=5\) - Draw a line with given intercept and slope: pass value to the

aandbarguments representing the intercept and slope respectively;abline(a=1,b=2)draws the line at \(y=1+2x\)

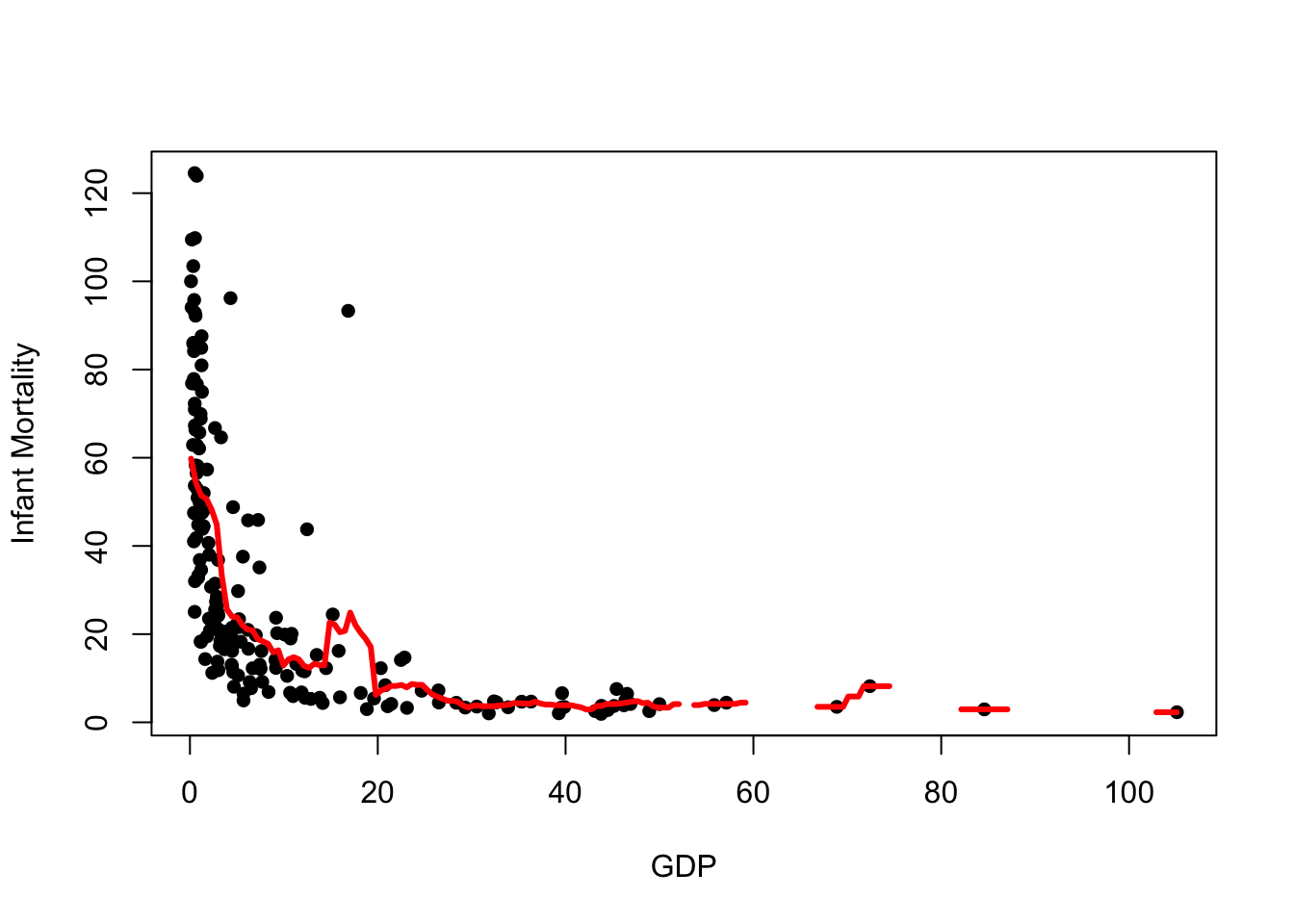

Let’s add our moving average trend to the scatterplot

plot(x=UN$ppgdp, y=UN$infantMortality, xlab="GDP", ylab="Infant Mortality", pch=16)

lines(x=fit$x, y=fit$y, col="red", lwd=3)

While it mostly follows the shape of the data, there are some obvious issues:

- The trend is still quite noisy, and not quite the smooth curve we might expect

- There are gaps in the trend - this is where our data points are so far apart that we have no data points in the neighbourhood to produce an average

- The moving average is very sensitive to extremes and outliers. Notice how the trend is ‘pulled upwards’ by a few large mortality values at the left of the plot.

- Repeat the commands above but increase the bandwidth.

- Add your new smooth trend to the plot using a different colour of line.

- How does the outlier affect the shape of the smooth?

1.2 Kernels

Kernel smoothers replace a moving average of nearby points with a weighted average of all the data, with nearer points having more contribution than more distant points.

We can fit the kernel smoother using the same ksmooth

function, but now we must change the kernel argument. This

smoother only supports the normal (Gaussian) or

box (Uniform) kernel functions.

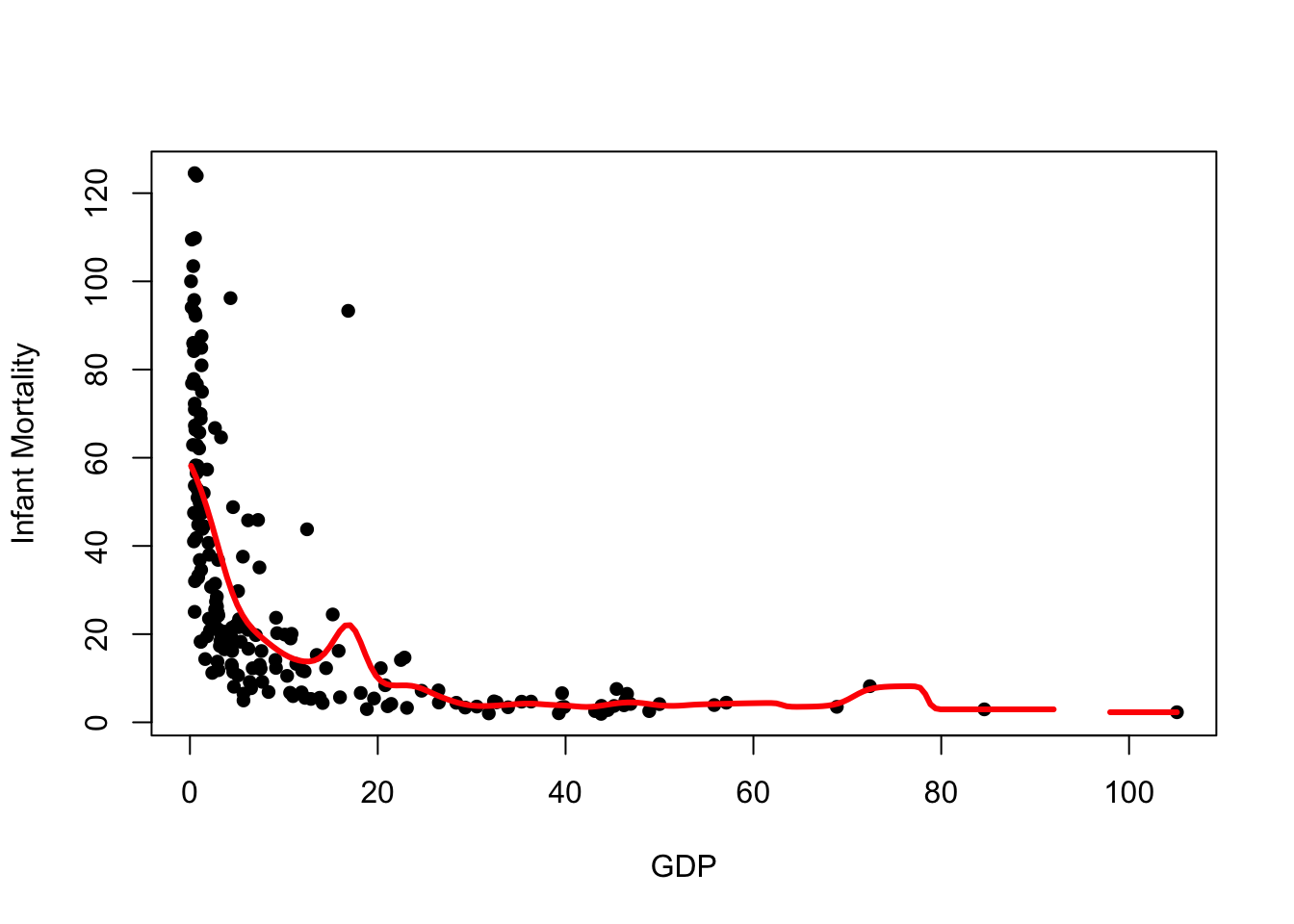

fit <- ksmooth(x=UN$ppgdp, y=UN$infantMortality, kernel = "normal", bandwidth = 5)

plot(x=UN$ppgdp, y=UN$infantMortality, xlab="GDP", ylab="Infant Mortality", pch=16)

lines(x=fit$x, y=fit$y, col="red", lwd=3)

We can see a number of notable changes here:

- The trend is much smoother

- We still have a gap where our data are sparse - the bandwidth is still too small

- There is a bump in the trend near the outlier we identified earlier,

and a bump around

GDP=75where our data are sparse and influenced by one larger value

Overall, this looks to be doing a better job, but still rather susceptible to extreme values.

1.3 Local regression and Loess

Local regression methods work in a similar way to our kernel smoother above, only instead of estimating the trend by a local mean weighting by nearby data we fit a linear regression using a similar weighting. We typically need to use a much larger bandwidth to have enough points to adequately estimate the regression line, but the results are generally closer to a more stable and smooth trend function.

The technique we have looked at is achieved using the

loess function, which takes a span argument to

represent the proportion of the entire data set used to estimate the

trend. This span plays the same role as the

bandwidth parameter does.

It’s a little more work to add the loess smoother to our plots, as we

must separately fit the model and then predict the

trend at some new locations (the ksmooth did both jobs for

us). However,

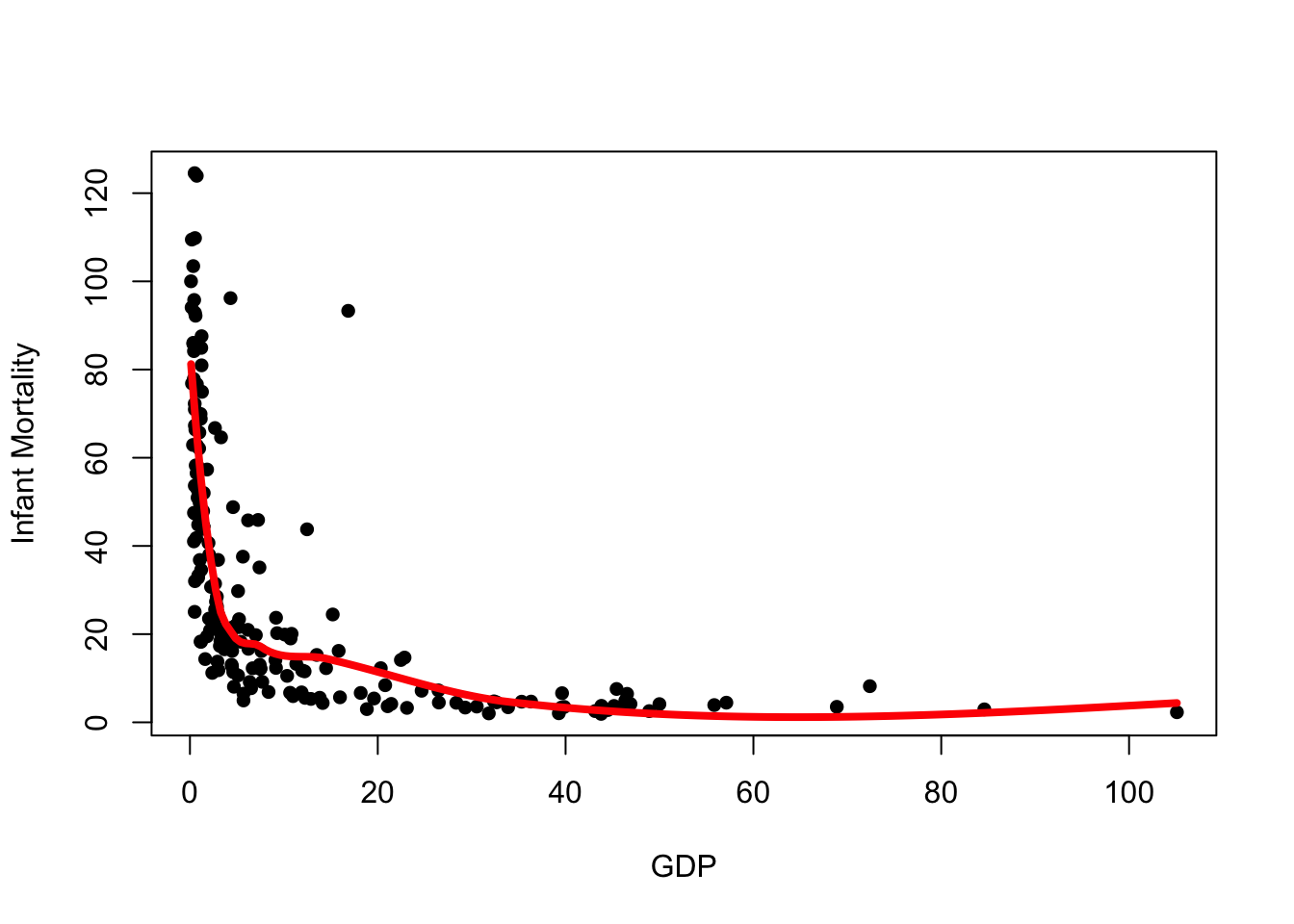

## fit the model, using 2/3 of the data

lfit <- loess(infantMortality~ppgdp, data=UN, span=0.66)

## create a sequence of points to cover the x-axis

xs <- seq(min(UN$ppgdp), max(UN$ppgdp), length=200)

## get the predicted trend at the new locations, and the error too

lpred <- predict(lfit, data.frame(ppgdp=xs), se = TRUE)

## redraw the plot

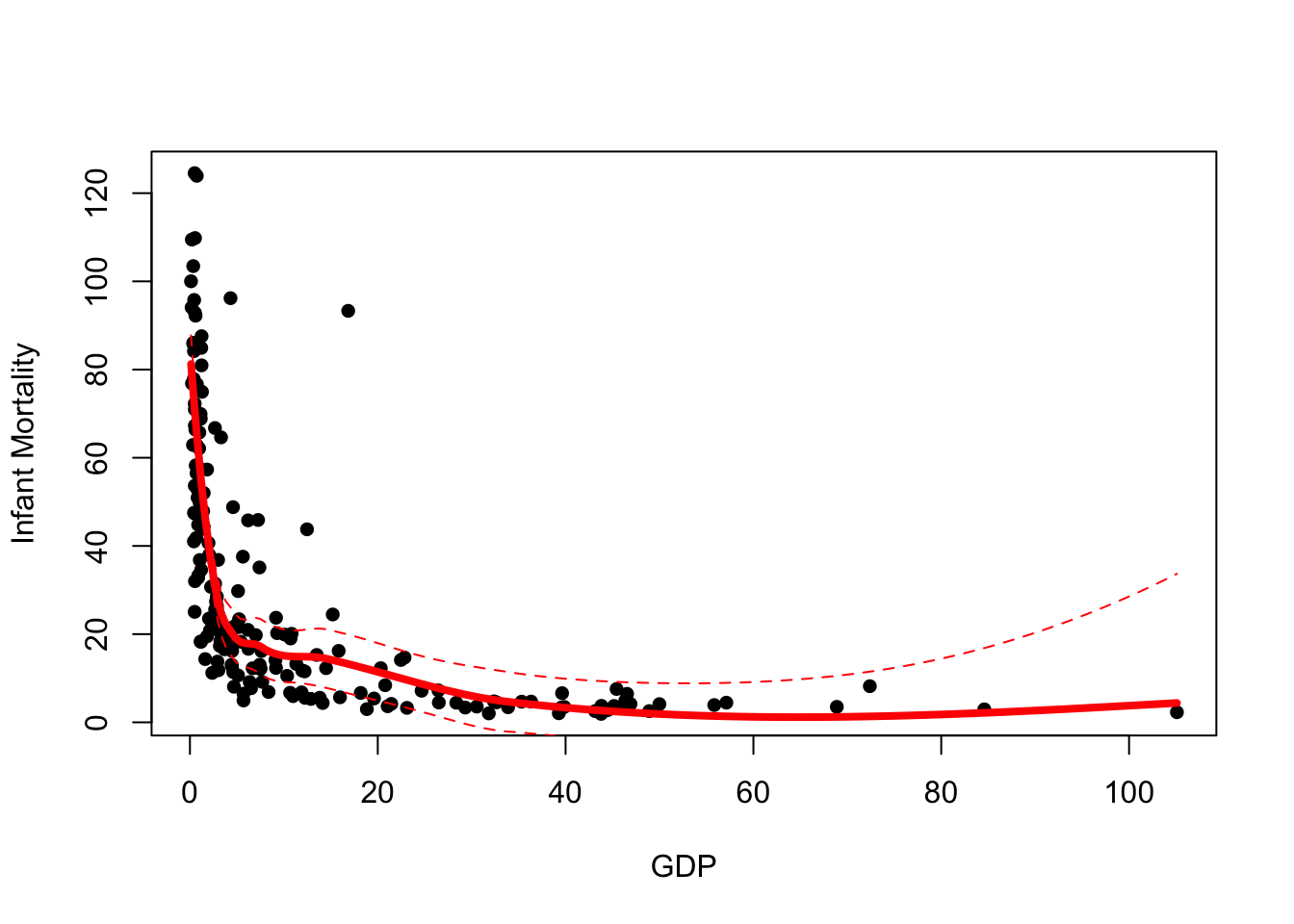

plot(x=UN$ppgdp, y=UN$infantMortality, xlab="GDP", ylab="Infant Mortality", pch=16)

## add the loess prediction

lines(x=xs, y=lpred$fit, col='red',lwd=4)

We see the loess curve is much smoother and better behaved, however it is still influenced by the outliers in the data.

Since the loess curve is built upon ideas of regression, we can use

regression theory to extact confidence intervals for our loess trend.

The predict function here returns a list with many

elements. We have used fit to get the predicted trend

lines, but we can use the se.fit component to build an

approximate confidence interval.

To do this, we’ll create a vector for the upper and lower limits of

the confidence interval as \(\hat{y}\pm

1.96\times \text{SE}(\hat{y})\) and use lines to add

these limits to our plot:

upr <- lpred$fit + 1.96*lpred$se.fit ## upper limit of 95% CI

lwr <- lpred$fit - 1.96*lpred$se.fit ## lower limit of 95% CI

plot(x=UN$ppgdp, y=UN$infantMortality, xlab="GDP", ylab="Infant Mortality", pch=16)

lines(x=xs, y=lpred$fit,col='red',lwd=4)

lines(x=xs, y=upr,col='red',lwd=1, lty=2)

lines(x=xs, y=lwr,col='red',lwd=1, lty=2)

A couple of things to note here:

- These confidence intervals are intervals for the location of the trend, not for the data. We do not expect most of our data to be between these limits.

- Notice how our uncertainty grows to the right of the plot where we have fewest data.

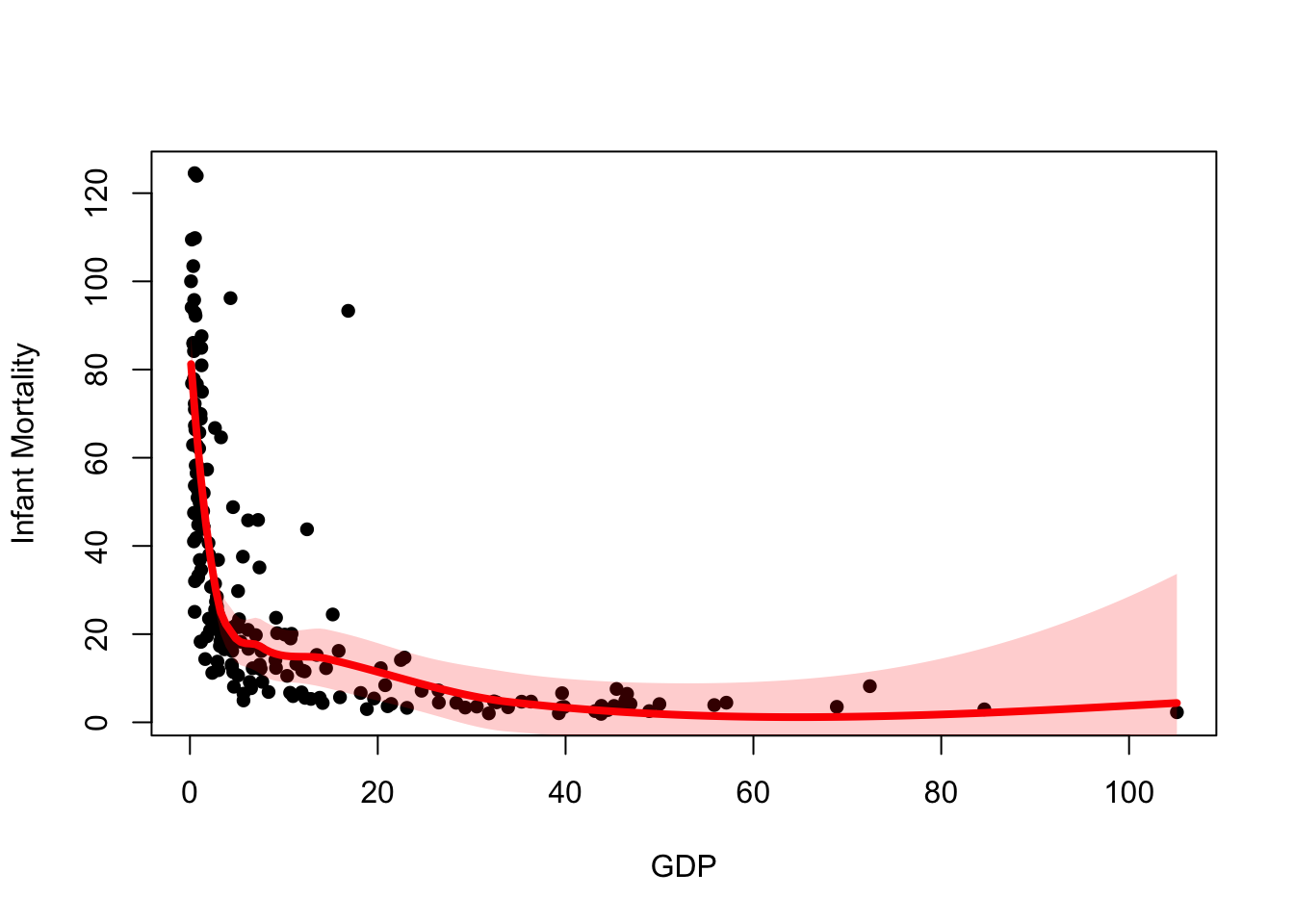

A nicer way to represent the confidence interval is by using a shaded region. Again, this is possible from the information we have but requires a little more work.

The polygon function can be used to draw shaded regions

on an existing plot. The default syntax is:

polygon(x, y, col=NA, border=NULL)

- The

xandyarguments take the \((x,y)\) coordinates of the shape to draw. - The default for

colour draws no fill, so it is advisable to set a colour here. - The default

borderoutlines the region with a black solid line. To disable the border, set this toNA.

We can use our confidence limits to draw the polygon, however we need to combine the points before calling polygon.

plot(x=UN$ppgdp, y=UN$infantMortality, xlab="GDP", ylab="Infant Mortality", pch=16)

lines(x=xs, y=lpred$fit,col='red',lwd=4)

## we need 'scales' to use the alpha function for transparency

library(scales)

polygon(x=c(xs,rev(xs)),

y=c(upr,rev(lwr)),

col=alpha('red',0.2), border=NA)

Here we defined y as c(upr,rev(lwr)),

i.e. the upper CI followed by the reversed lower CI. This

is because polygon joins successive points together, so we must reverse

the order of one of the vectors so we can draw around the shape

correctly. If you’re unsure why this is needed, repeat the above command

and remove the calls to the rev function in x

and y.

1.4 Data set 1: Academic salaries

Download data: Salaries

The Salaries data set contains the 2008-09 nine-month

academic salary for Assistant Professors, Associate Professors and

Professors in a University in the USA. The data were collected as part

of the on-going effort of the college’s administration to monitor salary

differences between male and female faculty members. Let’s take a look

at two variables in particular - salary, and

yrs.since.phd - the number of years since they completed

their PhD, as a measure of “experience”. Let’s take a look at the

data!

- Take a look at the data with

headorViewto familiarise yourself with the variables. - Draw a scatterplot of the

yrs.since.phdandsalary, withsalaryon the vertical axis. - What do you conclude about the relationship? Does it agree with what you expected?

- For each of the three types of smoother (simple moving average, a kernel smoother, and a loess fit), and add them to your plot as a different coloured lines. Experiment with different bandwidth values and spans.

- How do your different smoothers compare?

- Redraw the scatterplot, and add your loess curve as a solid line.

- Follow the steps above to add the confidence interval to your plot - either as parallel lines, or as a shaded area, or both!

- Use

lmto fit a simple linear regression of the formsalary~yrs.since.phd, and add this to the plot. Is this consistent with your smoothed trend and its confidence interval?

- There are a couple of categorical variables in this data set - the

sexof the academic, and theirrank- one ofProf,AssocProforAsstProfrepresenting Professor, Associate Professor, and Assistant Professor (in descending order of seniority). - Use colour to highlight the different sexes - what do you conclude?

- Use colour to highlight the different ranks - what do you see? What do you think about your trend now?

2 Density Estimation

Kernel density estimation (KDE) is a nonparametric method which uses kernels (as we’ve seen above) to estimate the probability density function of a continuous random variable. In other words, we are trying to produce a smoothed version of a histogram.

A density estimate is relatively easy to construct using the

density function in R. It takes a few useful arguments:

x- the databw- the bandwidth parameter. If not specified, R will try and determine a value for itself.kernel- the kernel function to use. Options includegaussian,rectangular,triangular,epanechnikov. Default isgaussian.

x and y

components that we can plot or add to a plot with

lines.

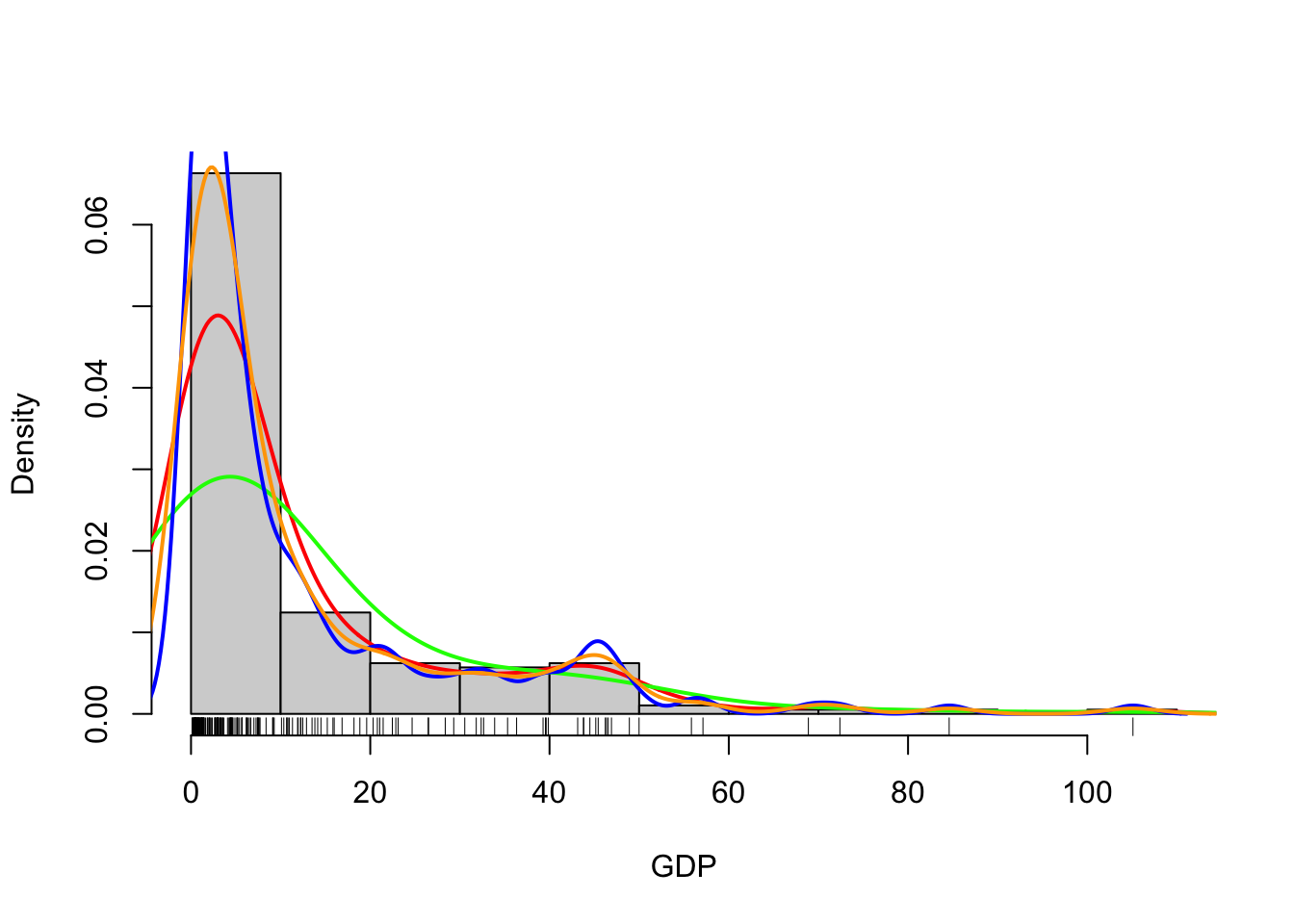

## draw a histogram of GDP on density scale

hist(UN$ppgdp, freq=FALSE,main='',xlab='GDP')

## add a rugplot to see where the points lie

rug(UN$ppgdp)

## a density estimate with Gaussian kernel and bandwidth of 5

d1 <- density(UN$ppgdp, bw=5)

lines(x=d1$x, y=d1$y, col='red', lwd=2)

## bandwidth of 10

d2 <- density(UN$ppgdp, bw=10)

lines(x=d2$x, y=d2$y, col='green', lwd=2)

## bandwidth of 2

d3 <- density(UN$ppgdp, bw=2)

lines(x=d3$x, y=d3$y, col='blue', lwd=2)

## let R choose a bandwidth

d4 <- density(UN$ppgdp)

lines(x=d4$x, y=d4$y, col='orange', lwd=2)

R does a reasonable job at guessing a not-too-stupid bandwidth

parameter. To estimate the bandwidth, R calls the bw.nrd0

function on the data - read the help file if you want to know more.

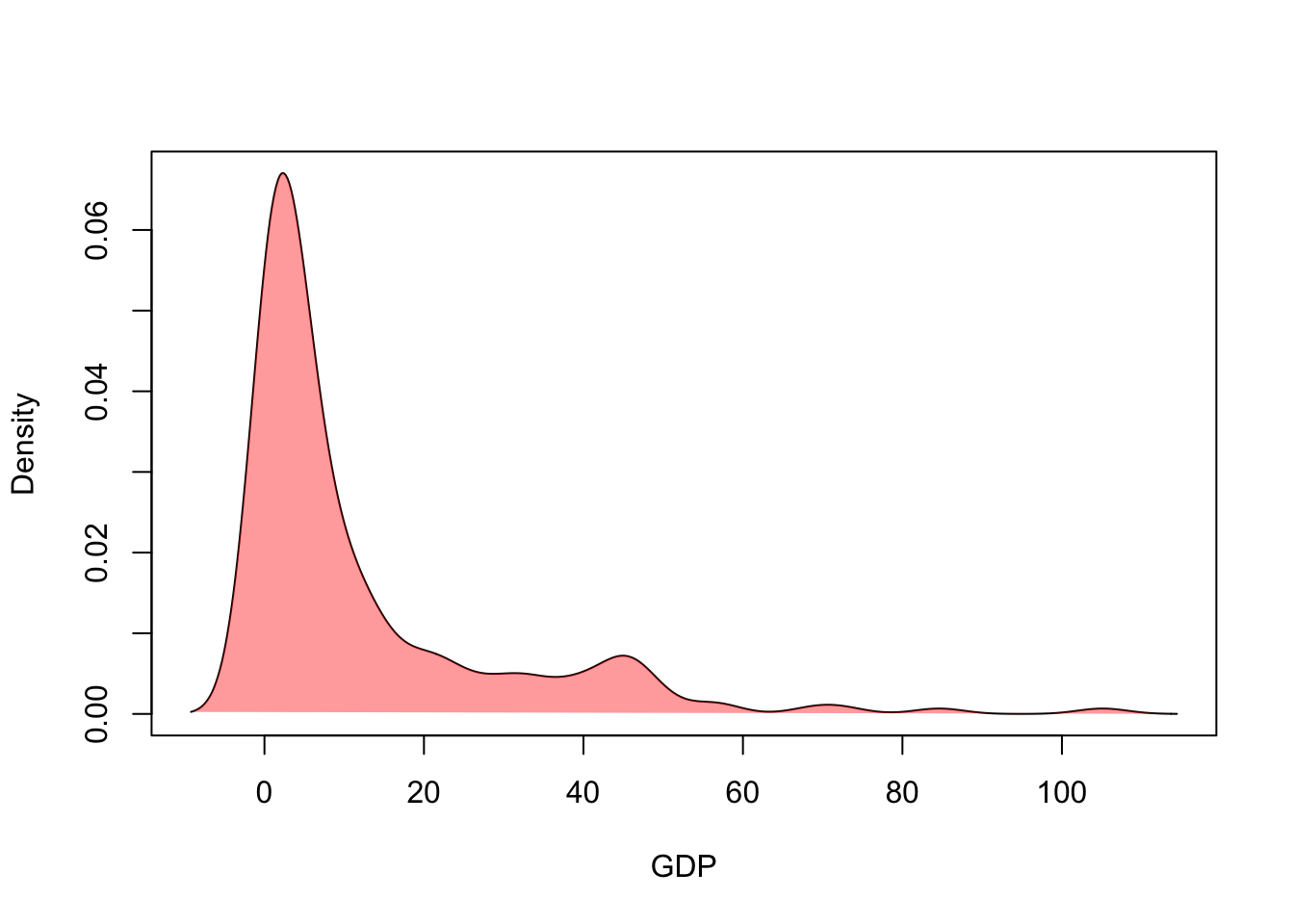

Alternatively, we could skip the histogram altogether and just draw the density estimate:

## draw the line

plot(x=d4$x, y=d4$y,xlab='GDP',ylab='Density',ty='l')

## and add some fill to make it prettier

polygon(x=d4$x, y=d4$y, border=NA, col=alpha('red',0.4))

This can be a helpful tool for comparing the densities from different groups in a single plot.

2.1 Violin plots

The violin plot is a combination of ideas of a boxplot and the kernel density estimate above. Rather than drawing the crude box-and-whiskers of a boxplot, we instead draw a back-to-back density estimate.

Download data: chickwts

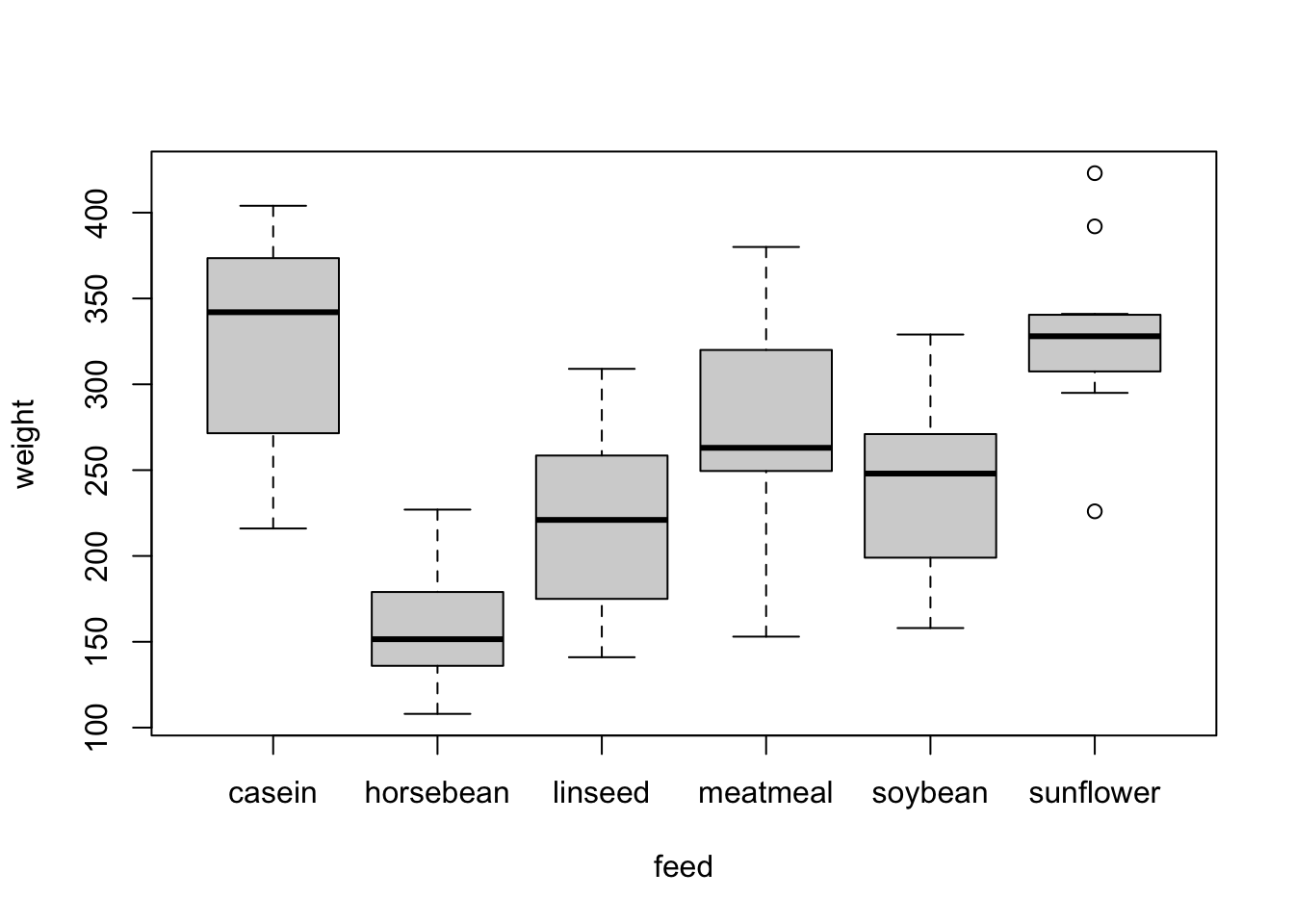

To illustrate, this consider the chickwts data set,

which records the weight of 71 chicks fed on a six different feed

supplements. Newly-hatched chicks were randomly allocated into six

groups, and each group was given a different feed supplement. They were

then weighed after six weeks. A separate boxplot can be drawn for each

level of a categorical variable using the following code (not again the

use of the linear model formula notation)

boxplot(weight~feed,data=chickwts)

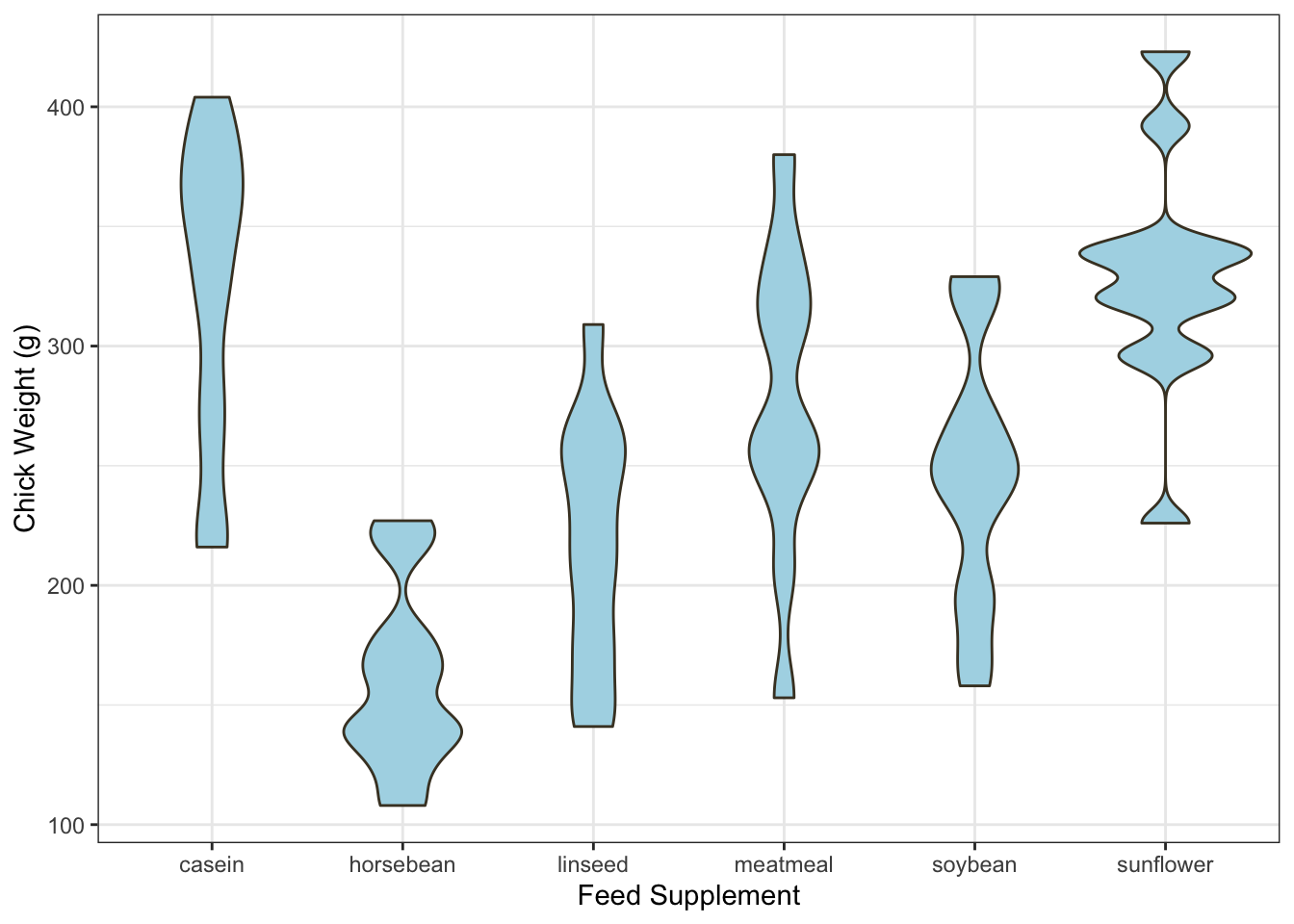

A violin plot takes the same layout of the boxplot, but draws a (heavily) smoothed density estimate instead of the box and whiskers.

library(ggplot2)

ggplot(chickwts,

aes(x = factor(feed), y = weight)) +

geom_violin(fill = "lightBlue", color = "#473e2c", adjust=0.5) +

labs(x = "Feed Supplement", y = "Chick Weight (g)")

Violin plots can be quite effective at conveying the information of

both a histogram and a boxplot in one graphic. As with most density

estimates, experimenting with the bandwidth is usually a good idea - in

this case the adjust parameter.

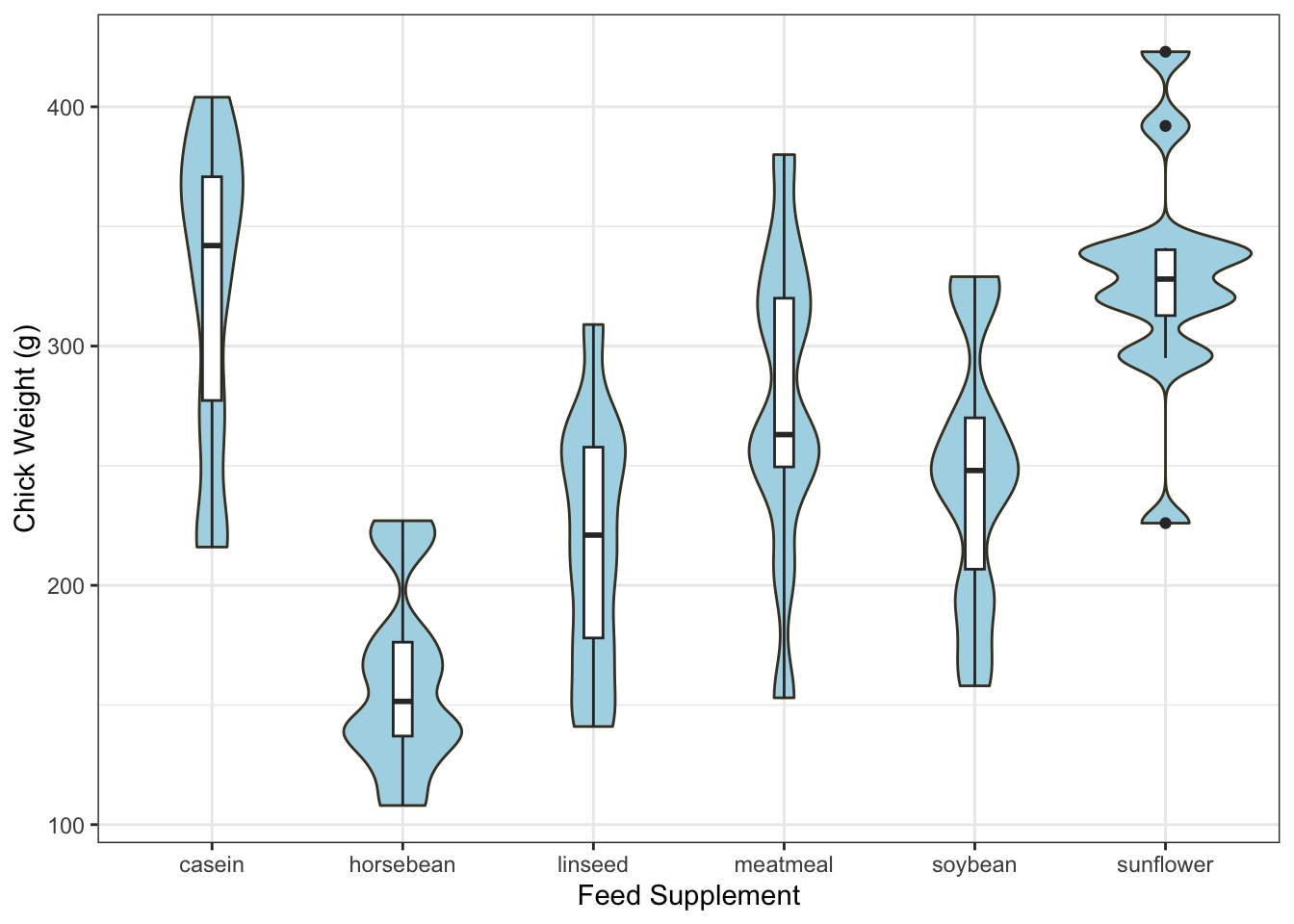

Finally, the plotting code for making a violin plot is a lot more

involved than usual! ggplot is a powerful, but alternative,

method of plotting in R. We’ve generally avoided it as it uses a

fundamentally different plotting system to our regular graphics and

takes rather more work to understand. However, it is exceptionally

flexible and with it you can do many things that would be difficult

otherwise, for instance:

library(ggplot2)

ggplot(chickwts,

aes(x = factor(feed), y = weight)) +

geom_violin(fill = "lightBlue", color = "#473e2c", adjust=0.5) +

labs(x = "Feed Supplement", y = "Chick Weight (g)") +

geom_boxplot(width=0.1)

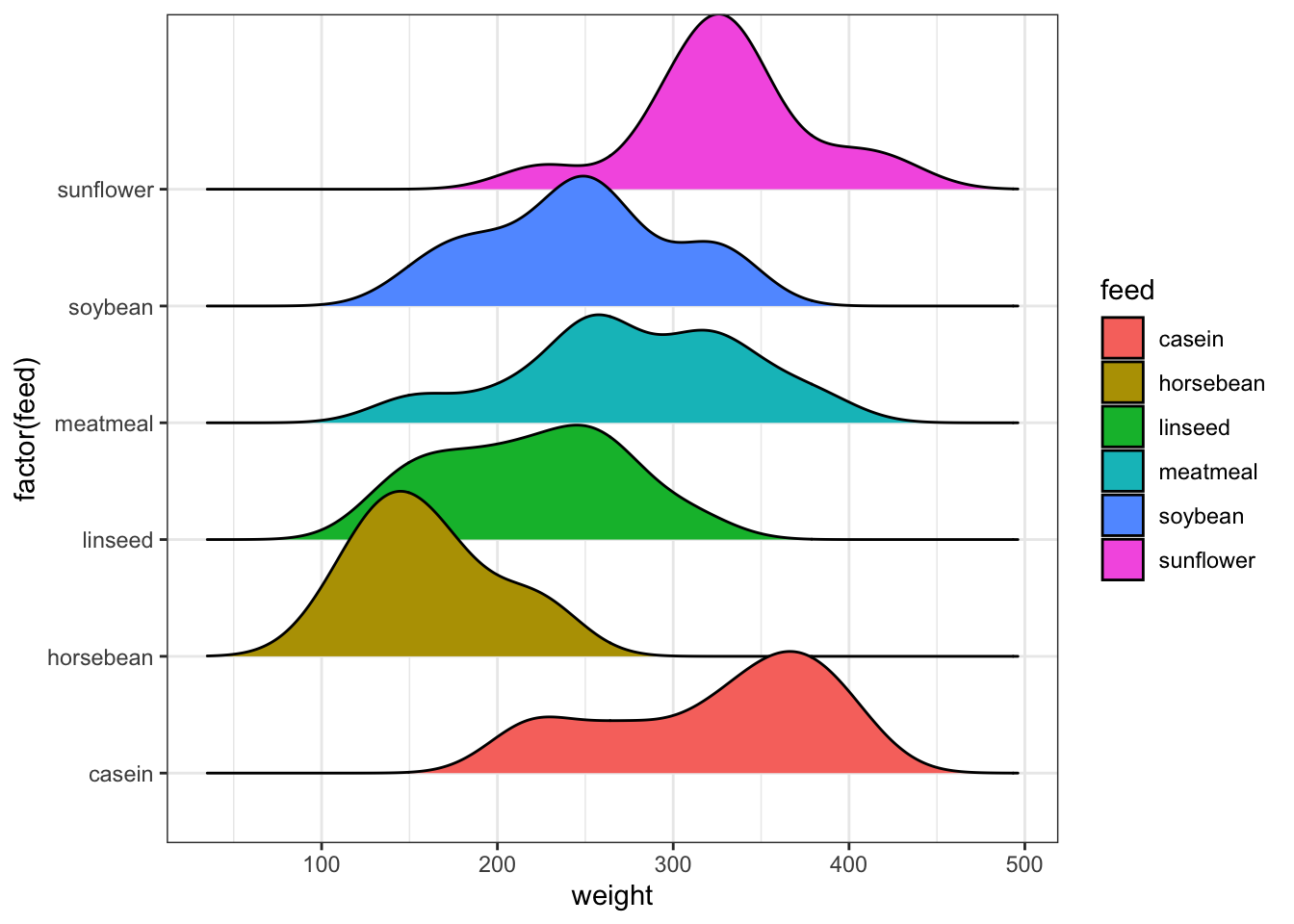

2.2 Ridgeline plots

Ridgeline plots, also called ridge plots, are another way to show density estimates for a number of groups. Much like the violin plot, we draw a separate density estimate for each group, but in the ridge line plot, the densities are stacked vertically to look like a line of hills or ridges (hence the name).

Again, this uses the gg family of plotting functions,

but can easily be adapted. The main control parameter here is the

scale which affects the height of the individual

densities:

library(ggridges)

ggplot(chickwts, aes(weight, y=factor(feed), fill=feed)) +

geom_density_ridges(scale = 1.5)

2.3 Data set 2: Diamonds & Price

Download data: diamonds

The diamonds data set contains information on the prices

and other attributes of 53,940 diamonds. In particular, it gives the

weight (carat) and price of each diamond, as

well as categorical variables representing the quality of the

cut (Fair, Good, Very Good, Premium, Ideal), the diamond

color (from D=best, to J=worst), and clarity

representing how clear the diamond is (I1 (worst), SI2, SI1, VS2, VS1,

VVS2, VVS1, IF (best)).

- Explore the distribution of the

caratof the diamonds. Start with a histogram and experiment with different numbers of bars. - Now make some density estimates and experiment with different bandwidths to produce a smoothed version of your histogram. Add these to your plot.

- What about the distribution of prices?

- How many diamonds are there of the different

cuts? - How does the distribution of prices change according to the

cutof the diamond?- Try drawing boxplots for each level of

cut. - How do these compare to violin plots? And ridgeline plots?

- Do you find any evidence of different behaviour amond the groups?

- Try drawing boxplots for each level of

- Perform a similar investigation of the diamond’s

carat.

- Draw plots to show how

pricechanges according to thecolorof the diamond. - Perform a similar investigation of the diamond’s

caratwithclarity. - What conclusions do you draw?

- Draw a scatterplot of the diamond’s carats against their price. What features do you see? You may need to modify your plot to deal with overplotting here.

- Try adding the

clarityas colour to your points. Does this expose any patterns? - What about

color?

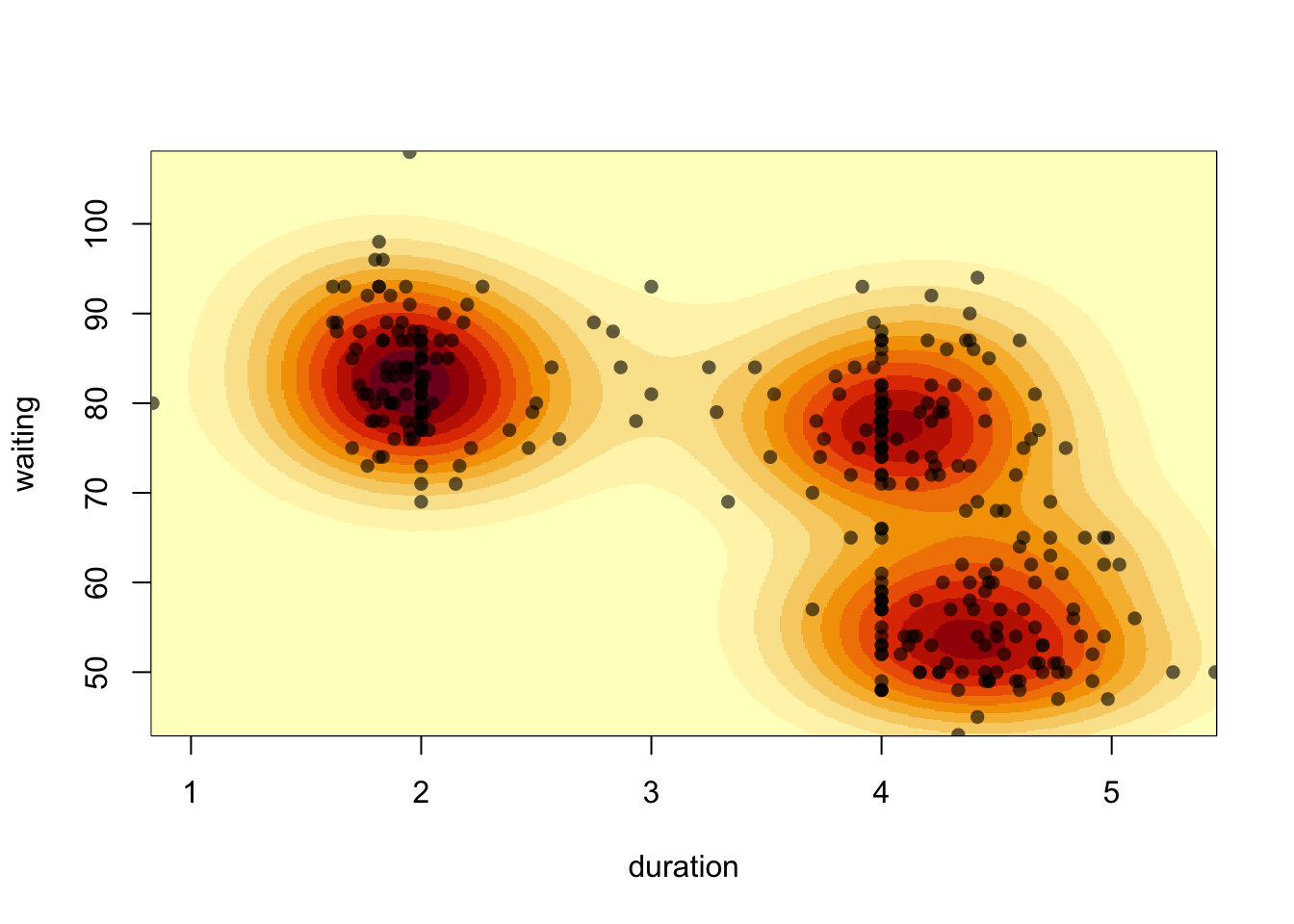

3 Extras: 2D Density estimation

The ideas of density estimation can be extended beyond one dimension. If we consider two variables at once, we can estimate their 2-d joint distribution as a smoothed version of a scatterplot. This also provides another alternative method of dealing with overplotting of dense data sets.

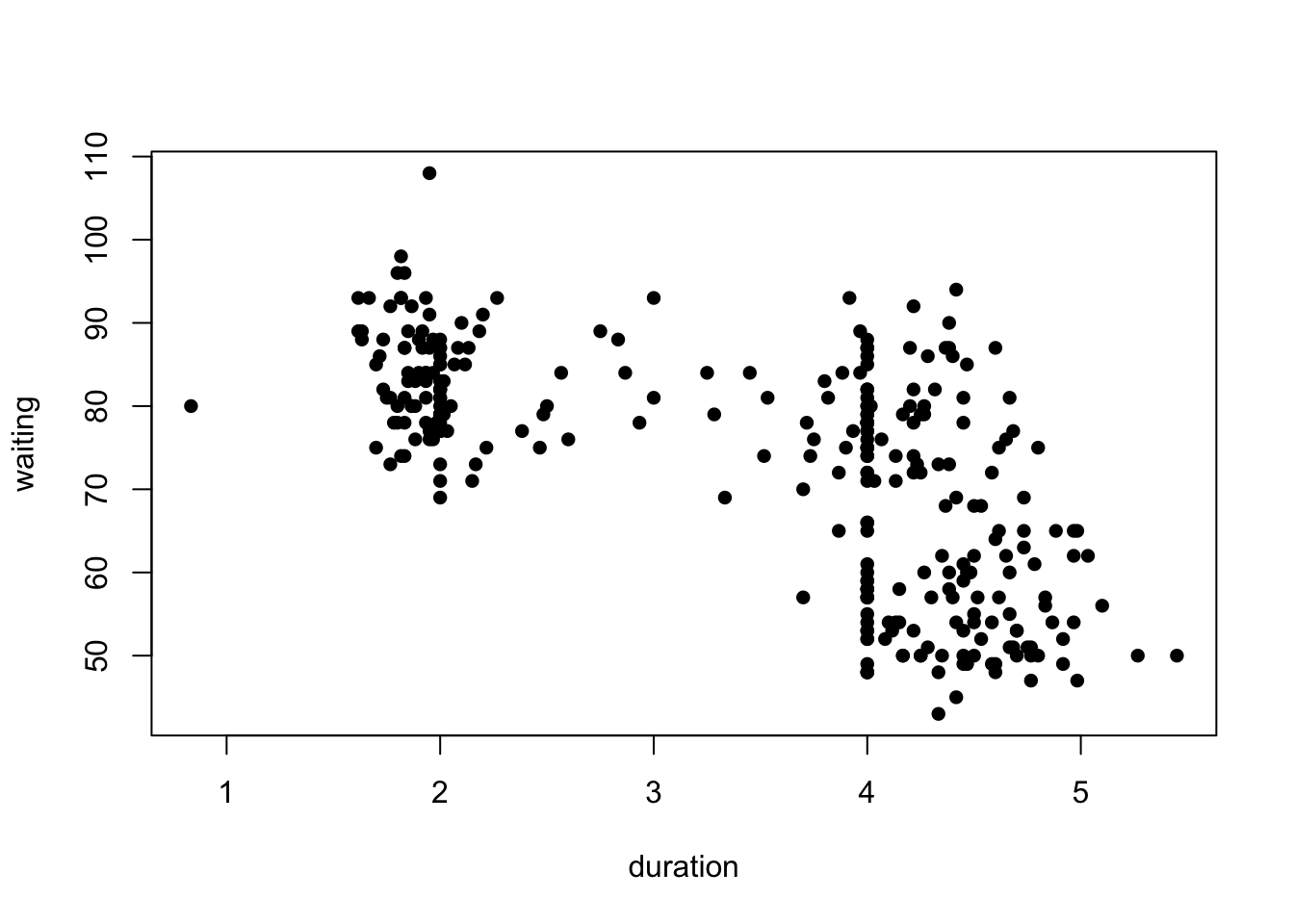

Let’s return to the Old Faithful data:

library(MASS) ## this is a standard package, you shouldn't need to install it

data(geyser)

plot(x=geyser$duration, y=geyser$waiting,

xlab='duration', ylab='waiting', pch=16)

kde2d function from

the MASS package. It has few arguments, just the

x and y to be smoothed, and an optional

n which controls the amount of detail in the resulting

smoothed density.

k1 <- kde2d(geyser$duration, geyser$waiting, n = 100)The output of kde2d is a bit more complicated than we

get from a 1-D density estimation. It’s now a list with x,

y, and z components. The x and

y components are the values of the specified variables

(here, duration and waiting respectively)

which are used to create a grid of points. The density value at every

combination of x and y is then estimared and

returned in the z component.

n here indicates the number of grid points

along each axis, effectively acting as an “inverse bandwidth”.

Increasing this will give you smoother output due to making more

estimates in a bigger z matrix, but will take longer to

evaluate.

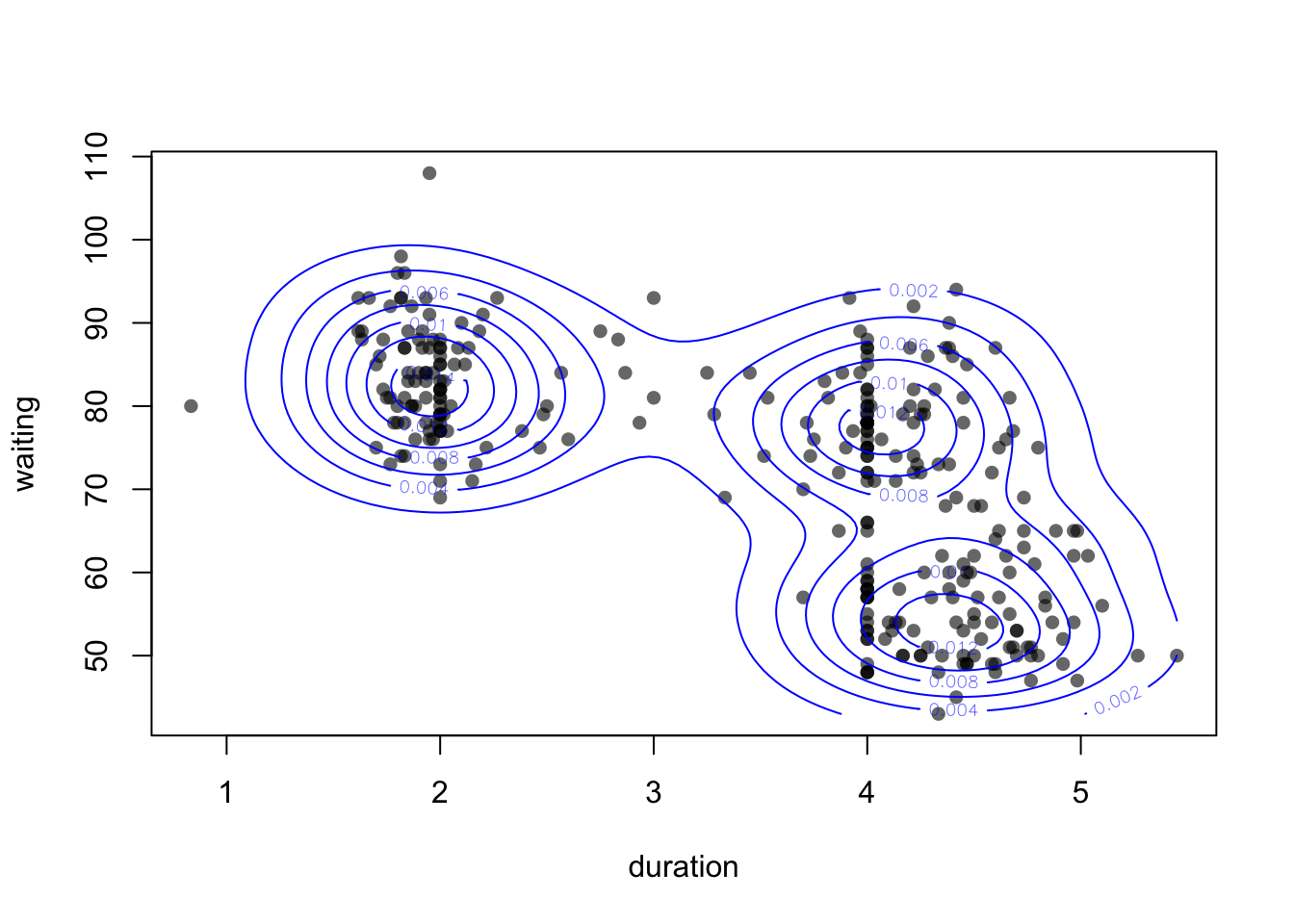

We can then add the smoothed density to our scatterplot as contours:

plot(x=geyser$duration, y=geyser$waiting,

xlab='duration', ylab='waiting', pch=16, col=alpha('black',0.6))

contour(k1, add=TRUE, col='blue')

Using add=TRUE we can draw the contours on top of an

existing plot. See the help on contour (?contour) for more

ways to customise.

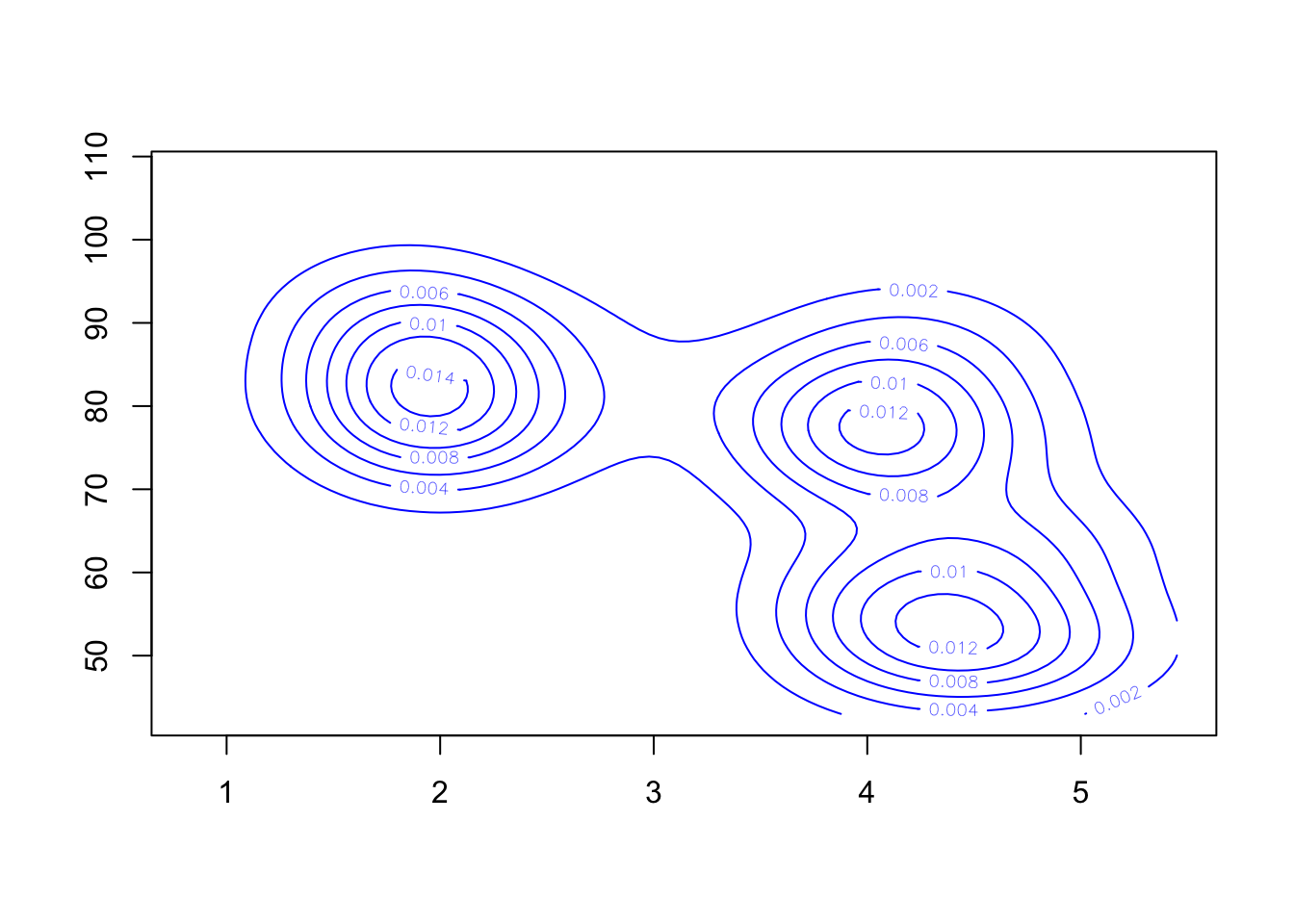

Alternatively, we could skip the scatterplot and just draw the contours (useful if we have too many points)

contour(k1, col='blue')

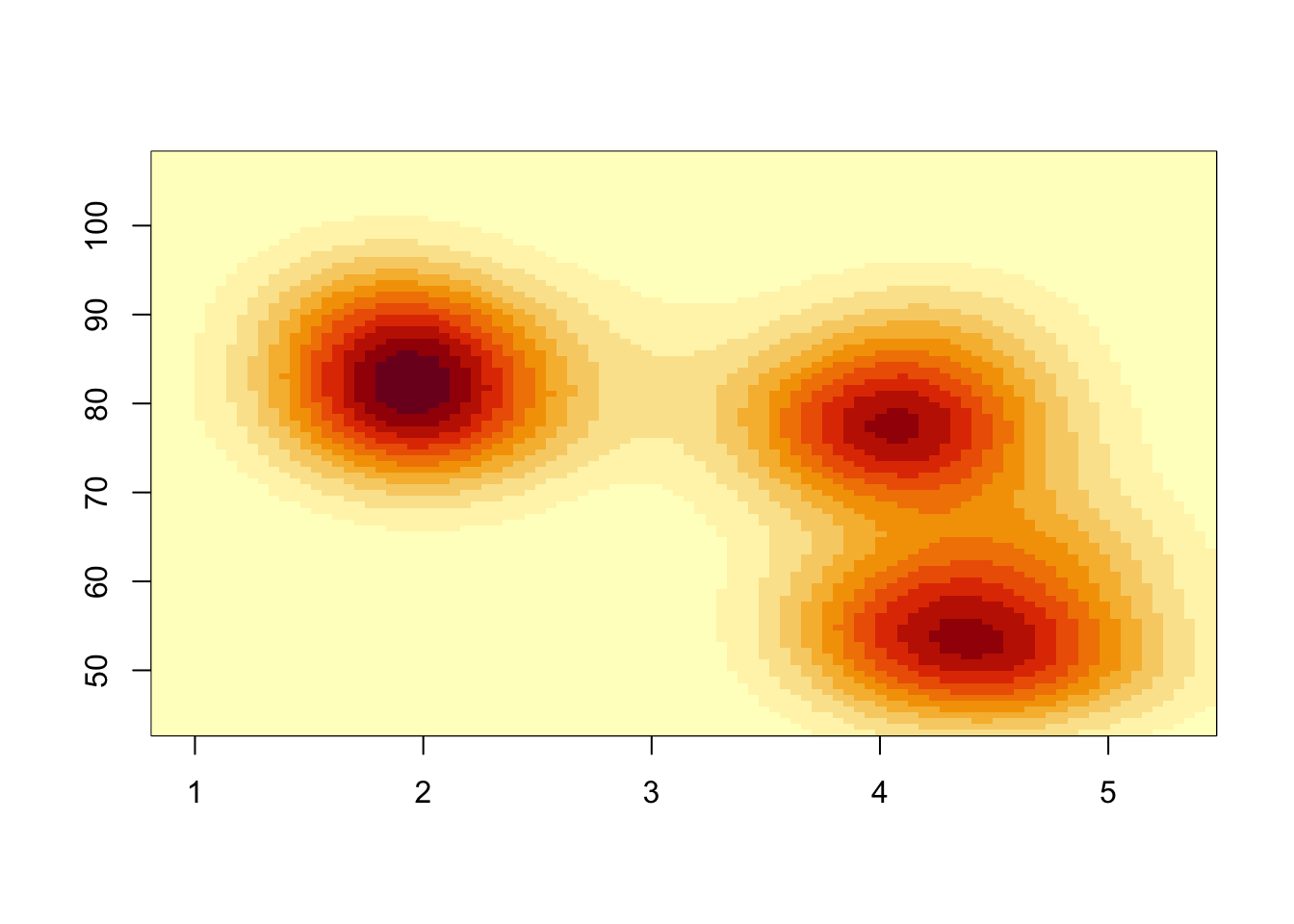

A different presentation of the density estimate is to draw the surface as a heatmap:

image(k1)

Each rectangular region in the plot is now coloured according to the

density estimate. The heatmap is a little blocky due to the resolution

of our density estimate. Specifying n=100 divides each axis

into 100 intervals, giving us 10,000 2-D bins over the range of the

data. Like a histogram, we can increase n to see more

detail - though it can take a little while to compute.

If we want to overlay the data points, we can use the

points function:

image(kde2d(geyser$duration, geyser$waiting, n = 500),

xlab='duration', ylab='waiting')

points(x=geyser$duration, y=geyser$waiting,pch=16, col=alpha('black',0.6))

4 More practice

4.1 Data set 3: Hertzprung-Russell

Download data: HRstars

The Hertzsprung-Russell diagram is famous visualisation of the relationship between the absolute magnitude of stars (i.e. their brightness) and their effective temperatures (indicated by their colour). The Hertzsprung-Russell provides an easy way to distinguish different categories of stars according to their position on the plot.

We’re going to look at theHRStars data which contain 6220

stars from the Yale Trigonometric Parallax Dataset.

- Let’s start with single variables. Have a look at histograms of

VandBV. - Do you see any evidence of groups in the data? You’ll need to adjust the number of bars.

Univariate summaries can conceal a lot of information when the data are inherently multivariate. So, let’s consider both variables.

- Draw a plot of the magnitude

Vvertically against the observed colourBV. - The data contain a lot of points, so reduce your point size by

scaling down the symbol size. This can be done by setting the optional

argument

cexto a value between0and1- the default size is1. - What features do you see? Can you identify any groups in the data? Are there any outliers?

- The Sun has an absolute magnitude of 4.8 and B-V colour of 0.66. Use

the

pointsfunction (it works exactly the same way aslines) to add it to your plot - use a different colour and/or plot character to make it stand out. - How does your plot differ from the plots you find on the web? How would you adjust your scatterplot to match?

The central ‘wiggle’ in the plot corresponds to main sequence stars. Below that wiggle are the white dwarf stars, and above are the giants and supergiants.

- Fit a 2-D density estimate to the stars data.

- Overlay the contours on your scatterplot (use a different colour and/or transparency).

- The

kde2dfunction takes an argumenthwhich represents the bandwidth. We can supply a single value, or a vector of two values (one for each variable).

- The contour plot seems to miss out the less dense region of dwarf

stars in the bottom left due to the low density of points. The default

number of contours drawn is 10, and it is set by the

nlevelsargument. Increase the number of contours until a contour around the dwarf group is visible. - Alternatively, you can pick the levels at which the contour lines

are drawn by specifying a vector as input to the

levelsargument. I found that \(0.005, 0.01, 0.025, 0.05, 0.1, 0.15\) work well - try this now. - Can you identify the main groups of stars?

- Create a new categorical variable to that represents the values of

the

Uncertvariable, which represents the parallax error in the observations. Use three levels:Uncertunder 50Uncertabove 50 but below 100Uncertabove 100

- Use your new variable to colour the points in your scatterplot according to this error.

- Is this parallax error associated with the properties (and hence types) of observed stars?

4.2 Data set 4: Pearson’s heights

Download data: fatherSon

This is another dataset of father and son heights, this time from Karl Pearson (another prominent early statistician). This time we have 1078 paired heights recorded in inches and were published in 1903. Families were invited to provide their measurements to the nearest quarter of an inch with the note that “the Professor trusts to the bona fides of each recorder to send only correct results.”

- Draw histograms of the sons’ and fathers’ heights

(

sheightandfheight). Use a density scale and a bar width of 1 inch. - Compute a density estimate for both distributions.

- Draw the histograms side-by-side and overlay your density curves.

- Do the data look normally distributed?

We should be careful about jumping to conclusions here, or as Pearson put it in his 1903 paper: “It is almost in vain that one enters a protest against the mere graphical representation of goodness of fit, now that we have an exact measure of it.” For the time, he was justified in his demanding both graphical and analytical approaches. Since those early days, analytic approaches have often been too dominant, both are needed.

- Draw Normal quantile plots of the two variables side-by-side. Do the data look normal?

- Draw a scatterplot of sons’ heights (vertically) against fathers’ heights (horizontally).

- Is there a strong association here? Calculate the correlation.

- Fit a linear regression (recall in ISDS, you used the

lmfunction). Add the line to your plot.

The height of a son is influenced by the height of the father, but there is a lot of unexplained variability. This scatterplot also illustrates a phenomenon known as regression towards the mean. Small fathers havs sons who are small, but on average not as small as their fathers. Tall fathers have sons who are tall, but on average not as tall as their fathers.

To explore further whether a non-linear model would be warranted, we could plot a smoother andt the best fit regression line together.- Fit a smoother to the data.

- Add it to your plot with a 95% confidence interval.

- Are the regression and smoother in agreement?