Statistics, Text Classification, and the Web - Jonathan Cumming

Description

It is estimated that there are about 1.8 zettabytes (1.8 trillion GB) of data in existence today, and that 70-80% of that data is unstructured (text documents, notes, comments, surveys with free-text fields, medical charts). The real problem is not the amount of information available but our inability to process it. Unstructured text documents are particularly challenging for mathematical approaches, since language is ambiguous, context-dependent and riddled with inconsistencies and other problems (synonyms, homographs, etc.).

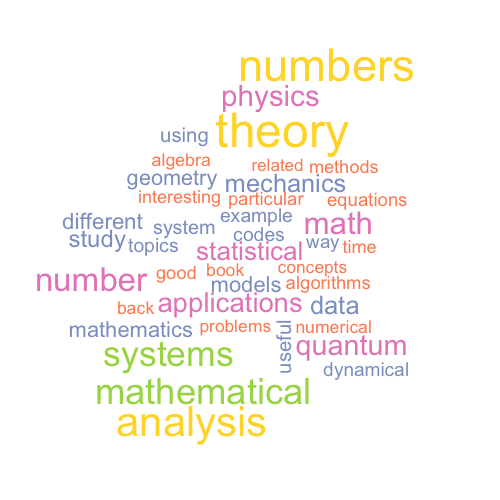

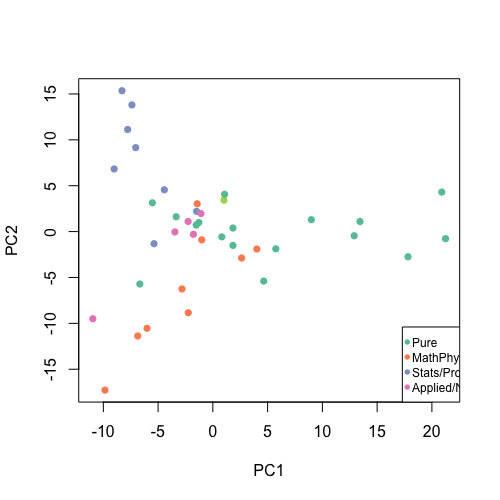

The first challenge in any modelling is to transform text information into a numerical format to which we can apply statistical techniques. The simplest approach is to turn each document into a vector of word frequencies, so that a collection of documents becomes a collection of observations of word counts over a particular vocabulary (though in practice things are more complicated than that). For example, we have taken the text of the 2015 Project 3 descriptions and reduced each topic to a vector of word frequencies. After some simple processing and normalising, we can represent the most frequent words graphically (left), or project the topics into a 2-D numerical space (right). Even with such a simple and aggressive dimension reduction, we begin to see topics on different subjects separate according to their word usage. More sophisticated representations are produced by methods such as word2vec, which capture not only which words were used but also position words with similar meanings close together in the vector space.

Word cloud (left) for 2015's Project 3 topics based on word frequency, and (right) 2-D projection of topics by area of mathematics.
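As a rough illustration of the word-frequency representation described above, the following Python sketch turns a few short texts into bag-of-words vectors and compares them with cosine similarity. The three documents, the small stop-word list and the use of relative frequencies as the normalisation are all illustrative assumptions, not the processing used for the figure; the 2-D projection step is not shown.

```python
import math
import re
from collections import Counter

# Hypothetical toy "topic descriptions"; these are NOT the 2015 Project 3 texts.
DOCS = {
    "stats": "We fit a statistical model to the data and test the model.",
    "text": "We model text data as word counts and fit a statistical model.",
    "geometry": "Curves and surfaces in geometry have curvature.",
}

# A tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"a", "and", "as", "in", "the", "to", "we", "have"}


def tokenise(text):
    """Lower-case the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def term_frequencies(text):
    """Bag of words: count each non-stop-word token, ignoring word order."""
    return Counter(t for t in tokenise(text) if t not in STOP_WORDS)


def to_vector(counts, vocabulary):
    """Normalise counts to relative frequencies over a fixed vocabulary."""
    total = sum(counts.values())
    return [counts[w] / total if total else 0.0 for w in vocabulary]


def cosine(u, v):
    """Cosine similarity between two frequency vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


# Shared vocabulary = every word seen in any document, in a fixed order.
counts = {name: term_frequencies(text) for name, text in DOCS.items()}
vocabulary = sorted(set().union(*counts.values()))
vectors = {name: to_vector(c, vocabulary) for name, c in counts.items()}

for a, b in [("stats", "text"), ("stats", "geometry")]:
    print(a, b, round(cosine(vectors[a], vectors[b]), 3))
```

Here the two statistics-flavoured texts share several words and score about 0.78, while the geometry text shares no words with the statistics text and scores 0.0. Word order is discarded entirely, which is exactly the bag-of-words simplification.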

Once the text is reduced to numerical form, we can apply standard statistical methods to build models, find clusters, predict the type or topic of new documents, and so on. Our goal in this project will be to apply these techniques to webpages in order to classify the pages according to their textual content. We will begin by studying how textual information can be treated as data that we can understand and model with appropriate statistical techniques. From there, we will consider:

- Clustering and classification - identifying and predicting the category of a document from numerical summaries of its text, in particular using recursive partitioning methods such as classification and regression trees.

- Topic modelling - building a formal statistical model that describes each document in terms of the topics it covers, such as the latent Dirichlet allocation (LDA) model.

- Investigating different statistical models for language, such as bag-of-words and n-grams.
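To make the idea of recursive partitioning concrete, here is a minimal Python sketch of a CART-style classification tree grown on bag-of-words counts. It greedily picks the split `count(word) <= threshold` that minimises the weighted Gini impurity, then recurses on each side. The labelled "pages", the category names and the `max_depth` limit are hypothetical and chosen only for illustration; they are not drawn from the Clicksco data, and a practical analysis would more likely use an established implementation such as the R package rpart.

```python
from collections import Counter

# Hypothetical labelled "web pages" as raw text (NOT real Clicksco data).
PAGES = [
    ("goal match striker goal", "sport"),
    ("match referee goal", "sport"),
    ("striker transfer fee", "sport"),
    ("shares market fee profit", "finance"),
    ("market profit shares", "finance"),
    ("interest rate market", "finance"),
]


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows):
    """Try every split 'count(word) <= threshold' and return the one
    with the lowest weighted Gini impurity, or None if none is valid."""
    vocabulary = sorted({w for bag, _ in rows for w in bag})
    best = None
    for word in vocabulary:
        for threshold in sorted({bag[word] for bag, _ in rows}):
            left = [y for bag, y in rows if bag[word] <= threshold]
            right = [y for bag, y in rows if bag[word] > threshold]
            if not left or not right:
                continue  # a split must actually separate the rows
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if best is None or score < best[0]:
                best = (score, word, threshold)
    return best


def grow_tree(rows, depth=0, max_depth=3):
    """Recursive partitioning: split the rows, then recurse on each half.
    Leaves are class labels; internal nodes are (word, threshold, left, right)."""
    labels = [y for _, y in rows]
    majority = Counter(labels).most_common(1)[0][0]
    if gini(labels) == 0.0 or depth >= max_depth:
        return majority  # pure node or depth limit: predict the majority class
    split = best_split(rows)
    if split is None:
        return majority
    _, word, threshold = split
    left = [(bag, y) for bag, y in rows if bag[word] <= threshold]
    right = [(bag, y) for bag, y in rows if bag[word] > threshold]
    return (word, threshold,
            grow_tree(left, depth + 1, max_depth),
            grow_tree(right, depth + 1, max_depth))


def predict(tree, bag):
    """Follow the splits from the root down to a leaf label."""
    while isinstance(tree, tuple):
        word, threshold, left, right = tree
        tree = left if bag[word] <= threshold else right
    return tree


# Each page becomes a bag of words (a Counter returns 0 for unseen words).
rows = [(Counter(text.split()), label) for text, label in PAGES]
tree = grow_tree(rows)
print(tree)
print(predict(tree, Counter("late goal wins the match".split())))
```

On this toy data a single split on `market` separates the two classes perfectly, so the printed tree is `('market', 0, 'sport', 'finance')` and the new page "late goal wins the match" is classified as sport. Real trees need pruning or a more careful stopping rule to avoid overfitting, which this sketch only approximates with `max_depth`.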

For this project, we will be looking at real data supplied by Clicksco. The project focuses on data analysis and statistical computation, so familiarity with the statistical package R and with general statistical concepts, together with programming skills and experience of practical data analysis, is essential.

Prerequisites/Corequisites

Statistical II, Statistical Methods III.

Further information

- The Wikipedia pages linked in the text above.

- Foundations of Statistical Natural Language Processing (Manning and Schütze), or a similar text, available in the Main Library.

- Text mining and topic models

- Bag of Words Meets Bags of Popcorn - a tutorial on natural language processing using IMDB film reviews (more machine learning than statistics, however).

- The CRAN Task View on natural language processing gives an overview of useful R packages in this area.